Producing high-value, SEO-friendly content is one of the best ways to attract visitors to your site organically and extend your brand’s reach. SEO-friendly content helps you rank higher in search results, which brings more traffic (and leads) to your website and can increase your revenue.

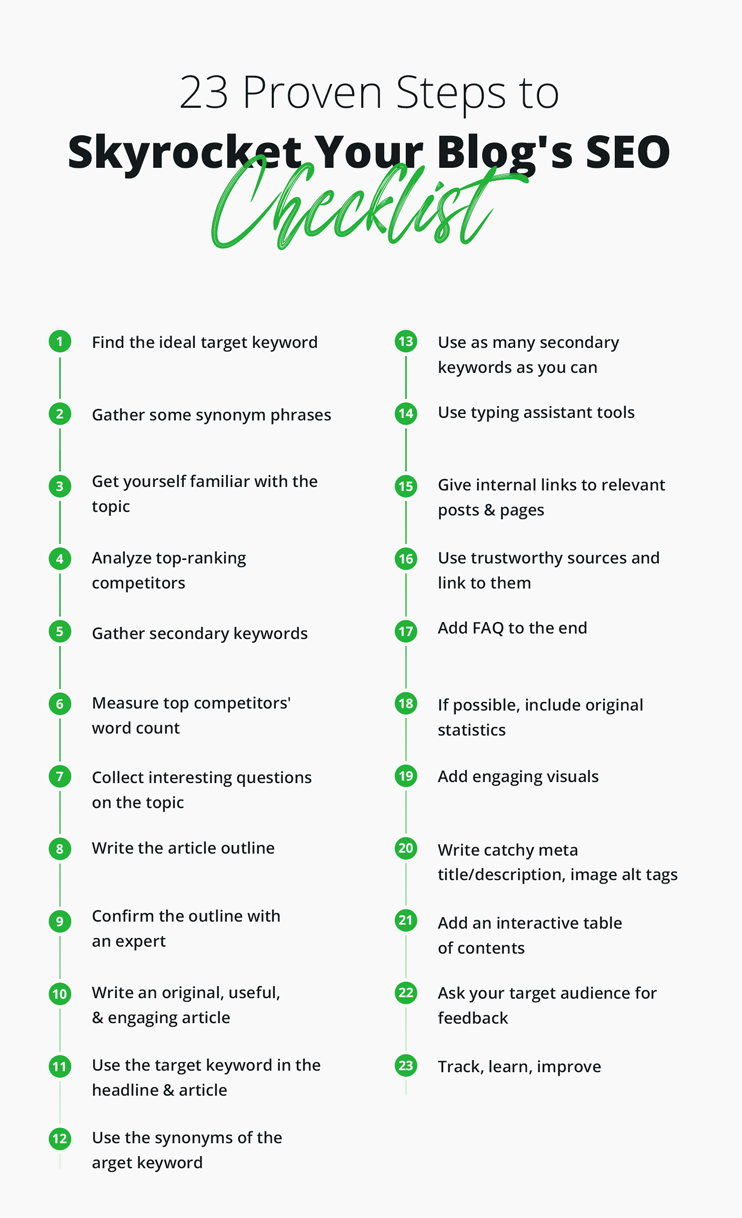

This article provides a comprehensive 23-step blog post SEO checklist to help you write better content that attracts organic traffic like a magnet. We’ll cover everything from keyword research and meta tags to linking and image optimization. With this guide, you will be able to create high-value content that is optimized for search engine algorithms.

In This Article

- The Misunderstanding About SEO Content and Its Actual Purpose

- 23 Proven Steps to Skyrocket Your Blog’s SEO [Checklist]

- 1. Find the Ideal Target Keyword for the Selected Topic

- 2. Gather Some Synonym Phrases for the Target Keyword

- 3. Get Yourself Well Familiar With the Topic

- 4. Analyze Top-Ranking Competitors for the Target Keyword

- 5. Gather Secondary Keywords

- 6. Measure the Word Count for the Top Competitors

- 7. Read Reddit and Quora Threads if Available and Collect Interesting Questions

- 8. Write the Article Outline With All the Information You Already Have

- 9. Confirm the Outline With a Subject Matter Expert if You’re Not One

- 10. Write an Article That Is Original, Easy to Read, Useful and Engaging

- 11. Use the Target Keyword in the Headline and Several Times Throughout the Article

- 12. Use the Synonyms of the Target Keywords to Keep the Read Natural

- 13. Use as Many of the Secondary Keywords as You Can When Writing Related Sections

- 14. Use Grammarly, Hemingway, and SurferSEO to Avoid Imperfections

- 15. Give Internal Links to Relevant Blog Posts and Pages

- 16. Use Trustworthy Sources for Backing Your Points and Link the Original Sources

- 17. Add FAQ to the End, to Answer Specific Questions Found in Communities

- 18. If Possible, Include Original Statistics

- 19. Add Visuals That Make the Content More Appealing and Easy to Skim

- 20. Don’t Forget to Write Catchy Meta Title, Description and Descriptive Image Alt Tags

- 21. Add an Interactive Table of Contents to Improve the Navigation

- 22. Find Someone From the Target Audience and Ask For Feedback

- 23. Track, Learn, Improve

- 7 Common SEO Mistakes in Digital Content Production

- 1. Recycling the Competitors’ Content

- 2. Not Engaging a Subject Expert When Writing About Things You Don’t Practically Know

- 3. Neglecting the Secondary and LSI Keywords

- 4. Overusing AI Content Creators

- 5. Not Dedicating Enough Effort to Meta Title and Description

- 6. Not Linking to External Sources

- 7. Downgrading the Importance of High-Quality Visuals

- Conclusion

- FAQ

The Misunderstanding About SEO Content and Its Actual Purpose

Many people think that focusing on SEO keywords and writing low-quality articles around them will put their website at the top of the search results. This is not the case. Such articles can harm your website’s ranking, which is bad for your business. There are many similar misconceptions about SEO, including the following:

- Link building does not help the content.

- Content can be written and published on the internet without SEO optimization.

- If you are targeting the right keyword then the content quality does not matter.

- Using as many keywords as possible helps content rank.

Ultimately, search engines look at every aspect of the content, such as its quality, flow, keywords, and links, and rank it in the search results based on all of these parameters. All of them need attention if you want to create SEO-friendly content. This is why understanding how to optimize content for SEO is crucial, and that is what the next section covers.

You don’t need deep SEO knowledge to understand these things. A blog post SEO checklist, also known as a content SEO checklist, will help you write well-optimized content and gain organic traffic for your website.

23 Proven Steps to Skyrocket Your Blog’s SEO [Checklist]

The way you structure the SEO content template behind your article plays a vital role. Let’s look at the 23 proven steps from the blog post SEO checklist that you can follow to improve your blog’s SEO and help your post rank at the top of the search results.

1. Find the Ideal Target Keyword for the Selected Topic

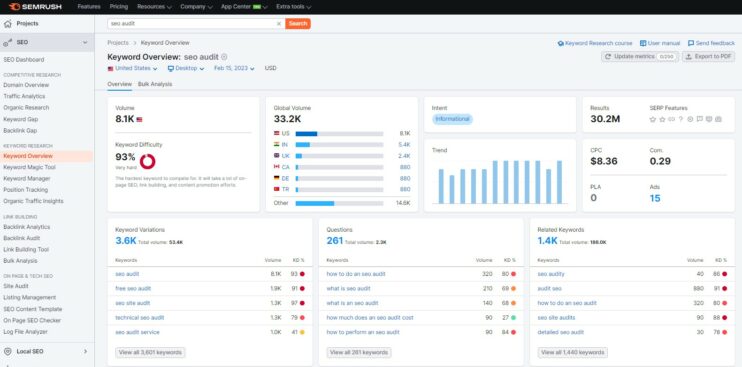

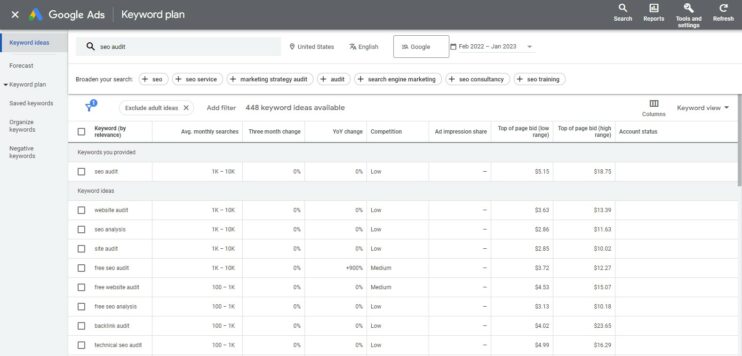

Finding the target keyword is the first step in the blog post SEO checklist. The ideal target keyword is the main keyword around which you should write and optimize your blog post. To find keywords for your article, you can use tools like Google Keyword Planner, Ahrefs or SEMrush. Open one of these tools in your browser and search for words related to the topic you are about to write about.

For example, if you are writing an article about “seo audit checklist”, you can search for “seo audit” and these tools will list the keywords related to that query. Look for keywords that describe your topic, then pick the ones that are searched more often and are less competitive.

It’s important to have a target keyword so that Google can understand exactly what your main topic is. You will usually optimize an article around one target keyword, but sometimes you can use two.
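
The selection rule above (more searches, less competition) can be sketched in a few lines of Python. This is only an illustration: the `Keyword` fields, the 0-100 difficulty scale, the `max_difficulty` cut-off, and the sample figures are all hypothetical, and real numbers would come from whichever keyword tool you use.

```python
from dataclasses import dataclass


@dataclass
class Keyword:
    phrase: str
    monthly_searches: int  # hypothetical figure copied from a keyword tool
    difficulty: int        # assumed 0-100 scale, higher = more competitive


def shortlist(keywords, max_difficulty=40, top=3):
    """Drop keywords that are too competitive, then prefer the most searched."""
    candidates = [k for k in keywords if k.difficulty <= max_difficulty]
    ranked = sorted(candidates, key=lambda k: k.monthly_searches, reverse=True)
    return ranked[:top]


# Made-up sample data, for illustration only.
ideas = [
    Keyword("seo audit", 5000, 70),
    Keyword("seo audit checklist", 1200, 35),
    Keyword("technical seo audit steps", 300, 20),
]
for kw in shortlist(ideas):
    print(kw.phrase, kw.monthly_searches, kw.difficulty)
```

With this sample data, “seo audit” is filtered out as too competitive and “seo audit checklist” comes first.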

2. Gather Some Synonym Phrases for the Target Keyword

Now that you have figured out the target keyword for your article, you also have to create synonym phrases for the target keyword.

Synonym phrases have the same meaning as the target keyword but different wording. For example, if your target keyword is “blog post SEO checklist”, a synonym phrase could be “SEO checklist for blog posts”.

You can use several synonym phrases throughout your article. SEO tools like Google Keyword Planner can help you find both target keywords and synonym phrases. The purpose is to use the synonyms interchangeably with the main keyword so that you do not repeat the target keyword unnaturally.

This makes the article flow much more naturally for the reader.

3. Get Yourself Well Familiar With the Topic

This step of the SEO content template is crucial. Being familiar with the topic you are writing about and understanding it thoroughly is important. People are always searching for well-written and accurate content. If your article does not provide correct information about the topic, it gives a bad impression to the reader, who may not visit your site again.

Always remember the line “Content is king”. If you are familiar with the topic, you can write better and more accurate articles. Articles that are rich in content are more likely to be ranked higher by search engines like Google.

4. Analyze Top-Ranking Competitors for the Target Keyword

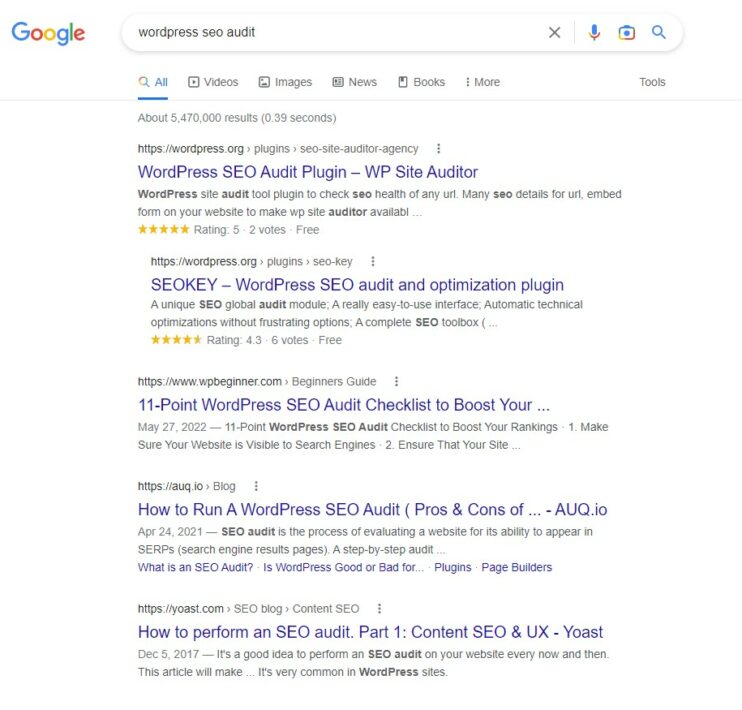

When researching a specific topic for your blog post, focus on what the competitors are doing. It is a great way of learning and improving yourself.

Note down the target keywords that the top-ranking posts are optimized for. Then, based on your research, decide on the target keyword for your own article.

Apart from analyzing competitors’ keywords, you should also look at how they structure their articles, how they engage readers with appropriate visuals, their writing style, and so on.

5. Gather Secondary Keywords

Secondary keywords go hand in hand with the primary keyword but are more specific, so they tend to have lower search volume. They typically have three or more words, and you can have several of them. They are also great hints for the article’s outline.

To gather secondary keywords you can use SEO keyword optimization tools such as Google Keyword Planner Tool, Ahrefs, SEMrush, Keywordtool.io, etc.

6. Measure the Word Count for the Top Competitors

As a general trend, most people don’t like reading long content. A long-form article that is not interesting enough to read will increase the bounce rate on your site, which will affect your site’s SEO.

However, long-form content that covers the topic comprehensively and addresses all the pain points the reader might have is important for search engines like Google, whether it runs to 1,500 words or 3,000, because it helps them figure out whether the content will be helpful to the user. So focus on providing the best value and you’ll reach the necessary word count.

If you are still not sure about the word count, look at the best-ranking articles written by top competitors on the same topic. This will give you a clear idea of how long your article should be.
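
As a rough sketch of how this comparison could be done, the Python snippet below counts the words in each competitor article and takes the median as a benchmark. It assumes you have already copied the article bodies into plain-text strings; the function names and the choice of the median (rather than, say, the average) are illustrative assumptions, not a rule from any SEO tool.

```python
import re
from statistics import median


def word_count(text: str) -> int:
    """Count whitespace-separated words in plain text."""
    return len(re.findall(r"\S+", text))


def target_word_count(competitor_texts: list[str]) -> int:
    """Median word count across the competitor articles."""
    return int(median(word_count(t) for t in competitor_texts))


# Tiny stand-in texts; real inputs would be full article bodies.
samples = ["one two three", "one two three four five", "one"]
print(target_word_count(samples))  # 3
```

The median is used here so that one unusually long or short competitor article does not skew the benchmark.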

7. Read Reddit and Quora Threads if Available and Collect Interesting Questions

Reading questions posted on community platforms like Reddit and Quora will show you what problems people are facing. Note down the interesting questions and shape your blog post content around them while following the blog post SEO checklist.

8. Write the Article Outline With All the Information You Already Have

After you have done a good amount of research on the topic, and before you start writing, always create an outline of the sections your article will contain. This will give you the gist of how the article will flow.

9. Confirm the Outline With a Subject Matter Expert if You’re Not One

If you are not confident about the outline, confirm it with a subject matter expert and work on the input they provide. This is important because the entire article will be written from this outline. The reader should not feel disconnected while reading your article because of incorrect or poorly positioned sections.

10. Write an Article That Is Original, Easy to Read, Useful and Engaging

Generating unique content is important for SEO. Do your research and get a thorough understanding of the topic you are writing about. Make sure that the article is easy to read and does not contain a lot of jargon; use jargon only where necessary. Structure the entire article so that it keeps the reader engaged and provides high value. You can use ChatGPT-based AI blog writers to overcome writer’s block.

11. Use the Target Keyword in the Headline and Several Times Throughout the Article

Since you are optimizing the entire article around your target keyword, you should use this keyword in multiple places throughout the article.

The target keyword must be added in the following places:

- In the H1 heading

- In any one of the H2 headings

- In the intro section (in the first 100 words)

- In the conclusion (in the last 100 words)
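
The four placement rules above can be checked with a small script. The following is a minimal Python sketch: the function name `keyword_placement` and its inputs (the H1 text, a list of H2 texts, and the article body as plain text) are assumptions made for illustration, and the check is a simple case-insensitive substring match rather than anything a search engine actually does.

```python
def keyword_placement(keyword: str, h1: str, h2s: list[str], body: str,
                      window: int = 100) -> dict[str, bool]:
    """Report where the target keyword appears in an article draft."""
    kw = keyword.lower()
    words = body.lower().split()
    intro = " ".join(words[:window])   # first `window` words of the body
    outro = " ".join(words[-window:])  # last `window` words of the body
    return {
        "h1": kw in h1.lower(),
        "any_h2": any(kw in h.lower() for h in h2s),
        "first_100_words": kw in intro,
        "last_100_words": kw in outro,
    }


# Made-up draft: the keyword appears in the intro but not in the conclusion.
report = keyword_placement(
    keyword="blog post SEO checklist",
    h1="The 23-Step Blog Post SEO Checklist",
    h2s=["Common SEO Mistakes", "Conclusion"],
    body="This blog post SEO checklist helps you. " + "filler " * 300 + "The end.",
)
print(report)
```

For this made-up draft, the report flags the missing keyword in the H2 headings and in the last 100 words.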

12. Use the Synonyms of the Target Keywords to Keep the Read Natural

The synonyms of the target keyword have the same meaning as the target keyword but different wording. Use them wherever possible to keep the reader in the flow. This is one of the SEO content writing best practices that will help with SEO content optimization.

13. Use as Many of the Secondary Keywords as You Can When Writing Related Sections

Since secondary keywords are more specific, using them several times within the article can help you rank for more keywords on the search engine results page. When using secondary keywords, make sure they are relevant and accurately reflect the primary topic of the article.

Using secondary keywords allows you to create content that targets different audiences who may be searching for similar terms or topics. For example, if your primary keyword is “home office setup”, then some related secondary keywords could include “desk setup”, “ergonomic chair” or “how to organize my workspace”.

14. Use Grammarly, Hemingway, and SurferSEO to Avoid Imperfections

Whenever you write content, the grammar and sentence structure should be correct so that it makes sense to the reader. Online tools such as Grammarly, Hemingway, and SurferSEO can help you write better-quality articles free of grammatical mistakes and other imperfections.

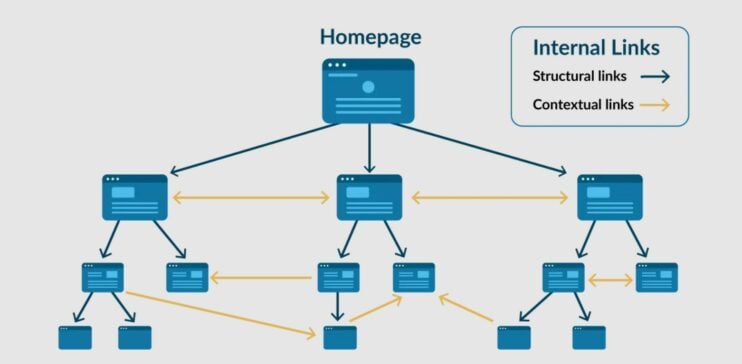

15. Give Internal Links to Relevant Blog Posts and Pages

Let’s say you are writing an article and some of its important concepts have already been discussed in detail in another of your articles. In that case, you can add an internal link from this article to that one.

Internal linking helps you spread the traffic coming to one of your articles across your other articles. Make sure that when the user clicks the link, it opens in a new tab instead of the current one, so that the user remains engaged in reading the article.

Internal linking also helps search engines understand that you have covered more topics in the same subject area and treat your site as an authority on it.

16. Use Trustworthy Sources for Backing Your Points and Link the Original Sources

Whenever you make a statement in your article, make sure that it is accurate and has proof supporting it. To do this, link to trustworthy sources that back your points.

For example, if you claim that a certain medicine can cure a certain disease, back the claim by linking to an appropriate source. A healthcare institution may have researched the topic, in which case you can link to its research paper.

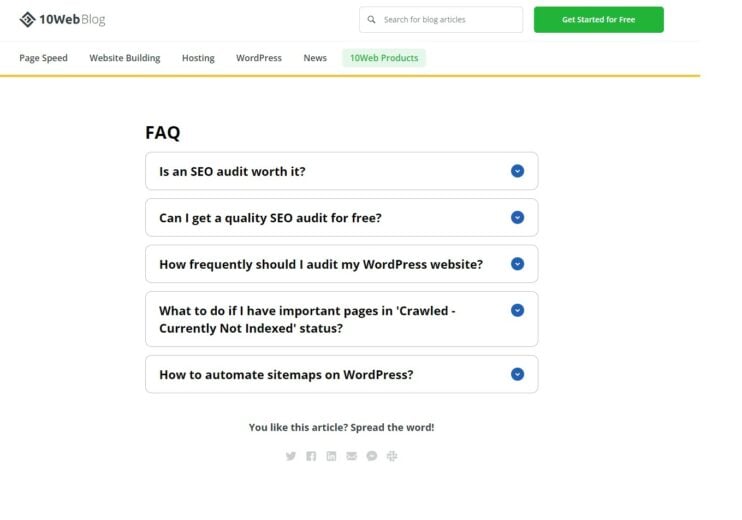

17. Add FAQ to the End, to Answer Specific Questions Found in Communities

Once users have finished reading the blog post, they might have questions about things covered in the article. So it is a good idea to end each blog post with an FAQ section that answers the most commonly asked questions from community platforms.

This will help the user get more clarity on the topic. Users who are searching for answers to such questions could also land on your site.

18. If Possible, Include Original Statistics

Adding original statistical data and numbers to your article is a good choice. This type of data usually attracts backlinks and gives a more trustworthy impression, so content that includes it tends to rank higher in search engines.

19. Add Visuals That Make the Content More Appealing and Easy to Skim

Visuals that you use on your blog post like images should be of high quality. While the user is reading your article, the visuals should keep the user engaged with the content. Also, use proper and accurate visuals to help users understand the information that you are trying to explain via your blog post.

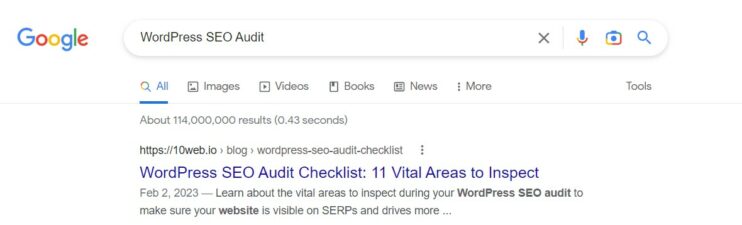

20. Don’t Forget to Write Catchy Meta Title, Description and Descriptive Image Alt Tags

The meta title and description are two of the most essential components of any blog post. When a search engine displays its results, the meta title and description are the first things the user sees. Only if they appeal to the user will the user click the link and land on your blog post.

Descriptive alt tags also play a vital role. If the images fail to load in the visitor’s browser, descriptive alt text lets the visitor figure out what each image was about. So it is always recommended to add descriptive alt tags to each image in your blog post. This way, you might also secure some good image search rankings and traffic.
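
One way to catch images without alt text before publishing is to scan the post’s HTML. The sketch below uses only Python’s standard `html.parser` module; the class and function names are illustrative, and it only detects missing or empty alt attributes, not whether the text is actually descriptive.

```python
from html.parser import HTMLParser


class AltAudit(HTMLParser):
    """Collect the src of every <img> whose alt text is missing or blank."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr = dict(attrs)
        alt = attr.get("alt") or ""
        if not alt.strip():
            self.missing.append(attr.get("src") or "")


def images_missing_alt(html: str) -> list[str]:
    audit = AltAudit()
    audit.feed(html)
    return audit.missing


# Made-up post fragment for illustration.
post_html = (
    '<img src="a.png" alt="Keyword research dashboard">'
    '<img src="b.png">'
    '<img src="c.png" alt=" "/>'
)
print(images_missing_alt(post_html))  # ['b.png', 'c.png']
```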

21. Add an Interactive Table of Contents to Improve the Navigation

The table of contents makes it easier for users to navigate within your article. Each of your articles should have a dedicated table of contents, and every section in your article outline should appear in it. This makes navigation easier for readers and keeps them engaged while reading.
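
If your blogging platform does not generate a table of contents for you, the idea can be sketched as follows: turn each outline heading into a URL-friendly anchor and pair it with the heading text. This Python snippet is an assumption-laden illustration; the `slugify` rule is one simple convention, and it presumes each heading in the published page carries a matching `id` attribute.

```python
import re


def slugify(heading: str) -> str:
    """Lowercase the heading and replace runs of other characters with '-'."""
    slug = re.sub(r"[^a-z0-9]+", "-", heading.lower())
    return slug.strip("-")


def build_toc(headings: list[str]) -> list[tuple[str, str]]:
    """Pair each heading with the in-page anchor it should link to."""
    return [(h, "#" + slugify(h)) for h in headings]


outline = [
    "1. Find the Ideal Target Keyword for the Selected Topic",
    "23. Track, Learn, Improve",
]
for title, anchor in build_toc(outline):
    print(f"{title} -> {anchor}")
```

Building the table of contents from the same outline list you wrote the article from keeps the two in sync.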

22. Find Someone From the Target Audience and Ask For Feedback

Always try to get feedback from people who visit your site, or from friends and family members who read your blog post. This will help you identify weaknesses in your writing, which will help you write better, higher-value blog posts as you move forward in your digital content production journey.

23. Track, Learn, Improve

This is the final SEO content optimization tip. SEO content checkers and optimization tools such as Google Analytics, Google Search Console, and Bing Webmasters let you check the SEO performance of your article.

With Google Analytics you can track the daily activity on your website, such as the number of visitors and the countries they come from, bounce rate, session duration, the source of the traffic (organic search, direct, social, etc.), the devices visitors use to access your site, and much more. It also lets you track which pages/URLs your users visit.

Google Search Console, on the other hand, helps you understand how users react to your content when Google presents it in search results. It gives you data such as total impressions, total clicks, average click-through rate (CTR), and the search queries for which the article ranked.
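
Click-through rate is simply clicks divided by impressions, expressed as a percentage. The short Python sketch below computes it and lists the queries that fall under a threshold. The row format `(query, clicks, impressions)`, the sample numbers, and the 2% threshold are assumptions for illustration only; they are not Search Console defaults.

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """CTR as a percentage; 0.0 when there were no impressions."""
    if impressions == 0:
        return 0.0
    return 100.0 * clicks / impressions


def low_ctr_queries(rows, threshold=2.0):
    """Queries whose CTR falls below `threshold` percent."""
    return [query for query, clicks, impressions in rows
            if click_through_rate(clicks, impressions) < threshold]


# Made-up rows in the assumed (query, clicks, impressions) format.
rows = [
    ("blog post seo checklist", 30, 1200),    # 2.5%
    ("seo checklist for blog posts", 4, 800), # 0.5%
]
print(click_through_rate(30, 1200))  # 2.5
print(low_ctr_queries(rows))         # ['seo checklist for blog posts']
```

Queries with many impressions but a low CTR are natural candidates for a rewritten meta title and description.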

After analyzing this data, look for the mistakes you have made and fix the things that are hurting your article’s SEO, so that the article becomes better optimized and ranks higher in search results.

7 Common SEO Mistakes in Digital Content Production

1. Recycling the Competitors’ Content

Most people who want to gain traffic instantly and earn a few bucks look for shortcuts. The most common SEO mistake they make in digital content production is copying and paraphrasing content from competitors’ websites. If you are among them, stop doing it right now.

Even if plagiarism tools don’t flag it as duplicate content, you can hardly provide real value with this practice.

2. Not Engaging a Subject Expert When Writing About Things You Don’t Practically Know

There are many things we don’t know, but we should always be curious to learn. If you are writing about a subject you are not familiar with or don’t understand in depth, consulting a subject matter expert before writing should be the obvious choice. Most people don’t do this and end up creating content that promotes wrong ideas or adds no value to what already exists.

A subject expert will help you gain knowledge about the subject, putting you in a better position to write a great, high-quality article.

3. Neglecting the Secondary and LSI Keywords

Using secondary and LSI (latent semantic indexing) keywords throughout your article makes it flow more naturally. It also gives search engines clearer context, because they understand content by analyzing the correlations between the words in each paragraph and across the whole article. That’s why the writer should use the right vocabulary and LSI keywords to make the piece more understandable for the bots.

4. Overusing AI Content Creators

AI technology has improved a lot and is making an impact in many fields. Using AI content creators to get inspiration for an article, overcome writer’s block, or improve a few paragraphs is a good idea, but using them to generate entire articles is not. These tools are trained on existing real-world datasets, and the output they produce tends to be too generic to qualify as quality content.

When you ask an AI creator to write about a specific topic, it writes based on what it already knows about that topic. If many people use the same tool to write their articles (which is true in practice for many AI creators), the tool can generate similar content for many users on the same topic, resulting in duplicate content.

5. Not Dedicating Enough Effort to Meta Title and Description

Your meta title and description should be catchy, appealing, and informative enough that the searcher clicks your result on the search results page. It does not matter how good your blog post is if the user does not feel like clicking on it. Yet most people don’t focus on this part of the blog post, so they receive less traffic.

6. Not Linking to External Sources

Not linking to external sources gives search engines the impression that the facts in your article are unsupported, so they cannot determine its trustworthiness. Search engines consider such content less SEO-friendly, which can result in low rankings.

Also, you should not link to just any external source: the source must be high quality and authoritative.

7. Downgrading the Importance of High-Quality Visuals

This is the final mistake on our list. The visuals you use in your blog post, such as images, should be of high quality. Many websites give little importance to visuals, and when they do use them, the visuals sometimes do not relate to the content of the blog post.

While the user is reading your article, the visuals should keep the user engaged with the content. Also, use proper and accurate visuals to help users understand the information that you are sharing via your blog post. This is how to optimize content for SEO.

Conclusion

Writing SEO-optimized blog posts is a bit tricky. There are many things you need to take care of to write SEO-friendly content. Most people who publish blog posts on the web do not focus much on SEO because they are not aware of SEO content writing best practices.

That’s why we have created this 23-step blog post SEO checklist to help you write high-quality content that ranks higher in search results. We have also covered some of the common mistakes people make while producing digital content and how to avoid them.

FAQ

How to avoid duplicate content?

Is Yoast SEO content analysis reliable?

Is SurferSEO content analysis the best?

Are content optimization analysis tools worth it?

Is it possible to write high-quality SEO content with AI writers?

AI technologies like ChatGPT are trained on real datasets. There is a high chance that such tools will produce similar content for many users, and the content might not always be accurate. This conflicts with one of our checklist points: creating original and valuable content.

Is keyword usage in an article still a thing for SEO?
